Function Calling

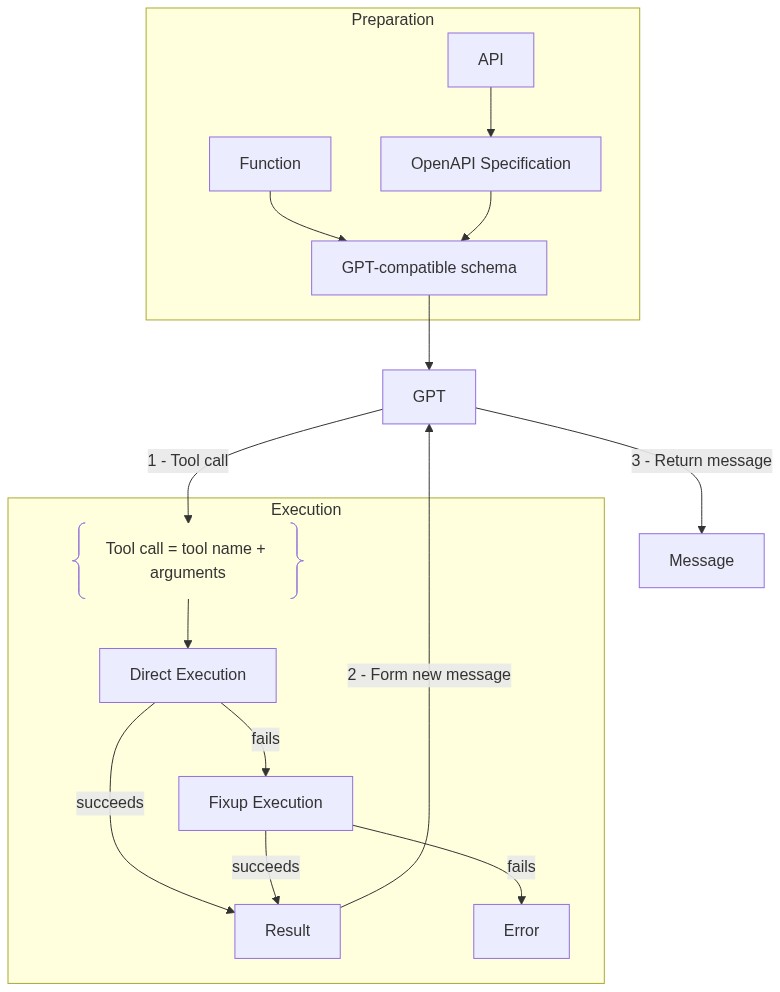

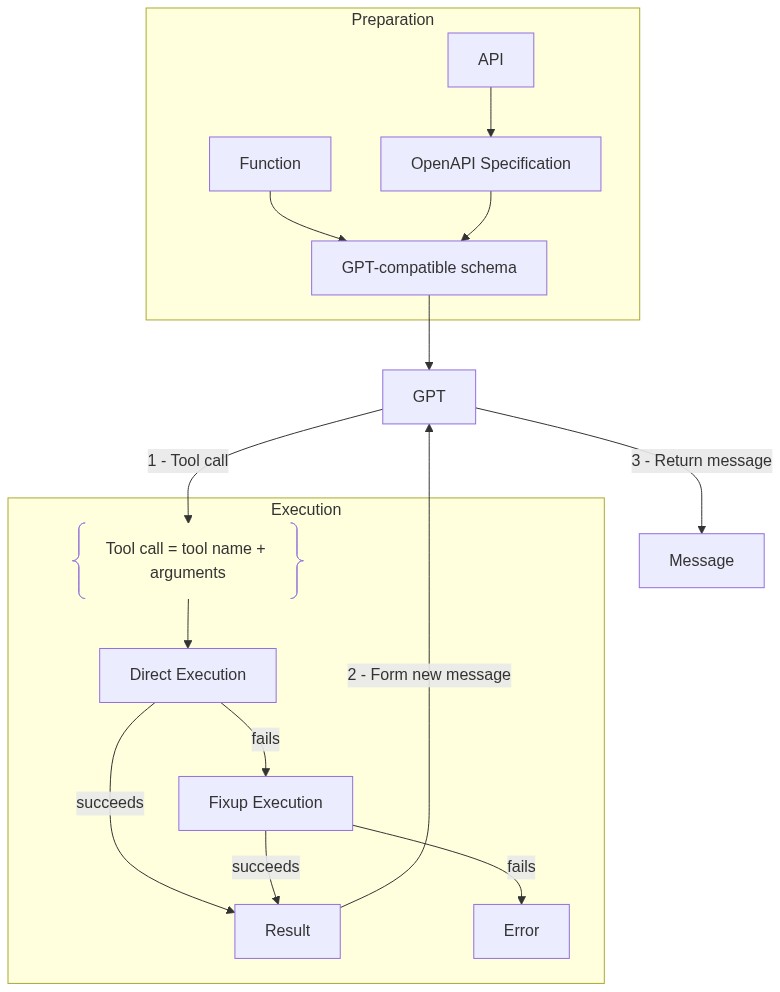

Summary of GPT Function calling process as implemented in this module:

GPT responses - Schema to Tool calls

OpenAI GPT learns about the tools available to it through a tools schema passed with the request. Based on the descriptions in that schema, GPT selects the tools appropriate for answering the user's messages. However, GPT does not execute these tools itself; it only returns a response message containing the name and arguments of each selected tool.

An example using GPT with a tool for getting weather information:

# Define the function to get weather information

from typing import Optional

def get_weather_information(

city: str,

zip_code: Optional[str] = None,

):

return {

"city": city,

"zip_code": zip_code,

"temparature": 25,

"humidity": 80,

}

# Define the tools schema with 'get_weather_information' function

tools = [{

'type': 'function',

'function': {

'name': 'get_weather_information',

'description': 'Get weather information for a given location',

'parameters': {

'type': 'object',

'properties': {

'city': {

'type': 'string',

'description': 'City name'

},

'zip_code': {

'anyOf': [

{'type': 'string'},

{'type': 'null'},

],

},

},

'required': ['city'],

},

}

}]

# Generate the response using the chat API

import openai

from pprint import pprint

messages = [

{

'role': 'system',

'content': 'You can get weather information for a given location using the `get_weather_information` function',

},

{

'role': 'user',

'content': 'What is the weather in New York?',

}

]

response = openai.chat.completions.create(model="gpt-4o", messages=messages, tools=tools)

pprint(response.choices[0].message.to_dict())

{'content': None,

'refusal': None,

'role': 'assistant',

'tool_calls': [{'function': {'arguments': '{"city":"New York"}',

'name': 'get_weather_information'},

'id': 'call_OM0VepmBDaPN6TbUd4P9lXur',

'type': 'function'}]}

This example shows the raw response from GPT for a tool call and the information that can be retrieved from it. Essentially, we can extract:

- Function name: alphanumeric string, unique for each tool in the tools schema

- Arguments: inputs for the function, given as a JSON-encoded string of key-value pairs

Apart from function-specific information, we also obtain an ID for this tool call. This is necessary to match this tool call to its results and generate further messages, as demonstrated in the following parts.

# Get the function name and parameters from the response

tool_calls = response.choices[0].message.to_dict()['tool_calls']

function_name = tool_calls[0]['function']['name']

function_parameters = tool_calls[0]['function']['arguments']

tool_call_id = tool_calls[0]['id']

function_name, function_parameters, tool_call_id

('get_weather_information',

'{"city":"New York"}',

'call_OM0VepmBDaPN6TbUd4P9lXur')

# Call the function with the parameters

import json

function = globals()[function_name]

results = function(**json.loads(function_parameters))

pprint(results)

{'city': 'New York', 'humidity': 80, 'temparature': 25, 'zip_code': None}

We can continue the previous conversation by appending both the tool call initiated by GPT and the result of that tool call:

messages.append(response.choices[0].message.to_dict())

messages.append({

'role': 'tool',

'content': json.dumps({

**json.loads(function_parameters),

function_name: results,

}),

'tool_call_id': tool_call_id,

})

response = openai.chat.completions.create(model="gpt-4o", messages=messages, tools=tools)

pprint(response.choices[0].message.to_dict())

{'content': 'The current weather in New York is 25°C with a humidity level of '

'80%.',

'refusal': None,

'role': 'assistant'}

This overall workflow serves as the guideline for implementing the function that executes function calling. Its steps are:

- Generate tool call(s) with GPT response

- Add tool call to conversation (messages)

- Execute tool call in system with the given name and arguments

- Add results of tool call with corresponding ID to conversation

- Re-generate message with the updated conversation
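The steps above can be sketched as a small loop. This is only an illustration under stated assumptions: `fake_model` is a hypothetical stub standing in for the chat API so the example runs offline, `run_tool_loop` and `registry` are made-up names, and the tool result is stored as plain JSON rather than in the combined format used earlier.

```python
import json
from typing import Callable, Optional


def get_weather_information(city: str, zip_code: Optional[str] = None) -> dict:
    """Local stand-in tool that returns fixed values."""
    return {"city": city, "zip_code": zip_code, "temperature": 25, "humidity": 80}


def fake_model(messages: list) -> dict:
    """Hypothetical stub for the chat API: requests the tool once, then answers."""
    if messages[-1]["role"] == "tool":
        return {"role": "assistant", "content": "Tool result received."}
    return {
        "role": "assistant",
        "content": None,
        "tool_calls": [{
            "id": "call_demo",
            "type": "function",
            "function": {"name": "get_weather_information",
                         "arguments": '{"city": "New York"}'},
        }],
    }


def run_tool_loop(messages: list, model: Callable[[list], dict], registry: dict) -> dict:
    """Repeat the workflow until the model stops requesting tools."""
    reply = model(messages)                          # 1. generate tool call(s)
    while reply.get("tool_calls"):
        messages.append(reply)                       # 2. add the tool call to the conversation
        for call in reply["tool_calls"]:
            function = registry[call["function"]["name"]]
            # 3. execute the tool with the given name and arguments
            result = function(**json.loads(call["function"]["arguments"]))
            messages.append({                        # 4. add the result under the matching ID
                "role": "tool",
                "content": json.dumps(result),
                "tool_call_id": call["id"],
            })
        reply = model(messages)                      # 5. re-generate with the updated conversation
    messages.append(reply)
    return reply
```

Passing the model as a callable keeps the loop independent of any particular client, so the same control flow can be exercised with a stub before it is pointed at the real API.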

Execute tool call in System / Global environment

In the workflow above, the actual function is retrieved from the global environment by its name. However, this approach does not work for functions that live in other modules or for tools backed by API requests. For those cases we can use dynamic imports and wrapper functions. Both can be configured through high-level data (metadata) stored alongside each function in the tools schema.

Dynamic imports

We can dynamically import functions and modules with importlib. To do this, we need the module name as a string, together with the function name. For example, the locally defined function get_weather_information can be imported from module __main__, while the show_doc function can be imported dynamically from module nbdev.showdoc.

import importlib

# Example of dynamically calling the 'get_weather_information' function

main_module = importlib.import_module('__main__')

weather_function = getattr(main_module, 'get_weather_information')

weather_function(**{'city': 'New York'})

{'city': 'New York', 'zip_code': None, 'temparature': 25, 'humidity': 80}

# Example of dynamically calling the 'show_doc' function

showdoc_module = importlib.import_module('nbdev.showdoc')

showdoc_function = getattr(showdoc_module, 'show_doc')

showdoc_function(weather_function)

get_weather_information

get_weather_information (city:str, zip_code:Optional[str]=None)
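The two lookups above follow the same pattern, which could be wrapped in a small helper. The name `resolve_function` and its default module are assumptions made for this sketch; the example uses the standard-library `math` module so that it runs without nbdev installed.

```python
import importlib
from typing import Callable


def resolve_function(name: str, module: str = "__main__") -> Callable:
    """Import `module` by its dotted name and return its attribute `name`."""
    mod = importlib.import_module(module)
    function = getattr(mod, name, None)
    if not callable(function):
        raise LookupError(f"{module}.{name} is not a callable")
    return function


sqrt = resolve_function("sqrt", module="math")
print(sqrt(16.0))  # 4.0
```

Raising a `LookupError` for a missing or non-callable attribute gives the caller one clear failure mode to catch before deciding whether to fall back to another strategy.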

Wrapper function

If the main function fails, we can fall back to a wrapper function that also receives the high-level data. This is useful for scenarios that need additional information or specific steps to execute (e.g., API requests). For system design purposes, these fixup functions should accept the following parameters:

- Function name: Positional, required parameter

- Metadata parameters: Any high-level data as optional keyword arguments

- Function parameters: Function arguments provided by GPT as optional keyword arguments
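A fixup function with this parameter layout might look like the sketch below. Everything here is hypothetical: `example_fixup`, `call_with_fixup`, the `base_url` metadata key, and the placeholder URL are illustrative names, and the fixup only describes the request it would make instead of sending one.

```python
from typing import Any, Callable, Optional


def example_fixup(function_name: str, **kwargs: Any) -> dict:
    """Hypothetical fixup: metadata and GPT arguments both arrive as keyword arguments."""
    # Assumption: the fixup knows its own metadata keys (here `base_url`),
    # so every remaining keyword is treated as a function argument.
    base_url = kwargs.pop("base_url", "https://example.invalid")
    return {
        "function": function_name,
        "request": f"{base_url}/{function_name}",
        "arguments": kwargs,
    }


def call_with_fixup(
    function: Callable,
    fixup: Callable,
    function_name: str,
    metadata: Optional[dict] = None,
    arguments: Optional[dict] = None,
) -> Any:
    """Try the main function first; fall back to the fixup if it raises."""
    metadata, arguments = metadata or {}, arguments or {}
    try:
        return function(**arguments)
    except Exception:
        # Metadata and arguments must not share keys, or this call raises TypeError.
        return fixup(function_name, **metadata, **arguments)
```

Because both kinds of data are passed as keyword arguments, a naming convention that keeps metadata keys distinct from function parameters avoids collisions.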

High-level data in tools schema

High-level data should be stored as simple JSON-formatted data in the tools schema so that it does not interfere with GPT's argument generation. A suitable structure is to add this information as properties of the function object in the schema:

- metadata: JSON-formatted data for any additional information

- fixup: Name and module source of the fixup function
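If the fixup entry is stored as a single dotted string, it has to be split into a module part and a function part before it can be imported. The sketch below assumes that the last component of the path is the function name; `load_fixup` is a hypothetical helper, and `json.dumps` serves only as a convenient importable target.

```python
import importlib
from typing import Callable


def load_fixup(path: str) -> Callable:
    """Resolve a dotted path such as 'package.module.function' to a callable."""
    # Assumption: everything before the last dot is the module name.
    module_name, _, function_name = path.rpartition(".")
    if not module_name:
        raise ValueError(f"expected 'module.function', got {path!r}")
    return getattr(importlib.import_module(module_name), function_name)


dumps = load_fixup("json.dumps")
print(dumps([1, 2]))  # [1, 2]
```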

Example of tools schema with high-level data:

# Define the tools schema with high-level information

tools = [{

'type': 'function',

'function': {

'name': 'get_weather_information',

'description': 'Get weather information for a given location',

'parameters': {

'type': 'object',

'properties': {

'city': {

'type': 'string',

'description': 'City name'

},

'zip_code': {

'anyOf': [

{'type': 'string'},

{'type': 'null'},

],

},

},

'required': ['city'],

},

# Extra high-level information

'metadata': {

'module': '__main__', # Module name

},

'fixup': 'fixup.module.function', # Fixup function

}

}]

Utility functions for managing messages

This section implements some basic utilities for building messages and printing them in formats suited to conversations.

form_msg

form_msg (role:Literal['system','user','assistant','tool'], content:str, tool_call_id:Optional[str]=None)

Create a message for the conversation

| | Type | Default | Details |
|---|---|---|---|
| role | Literal | | The role of the message sender |
| content | str | | The content of the message |
| tool_call_id | Optional | None | The ID of the tool call (if role == "tool") |

form_msgs

form_msgs (msgs:list[tuple[typing.Literal['system','user','assistant'],str]])

Form a list of messages for the conversation

| | Type | Details |
|---|---|---|
| msgs | list | The list of messages to form in tuples of role and content |
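Given the signatures above, the two builders could be as small as the following. This is a guess at the behavior, not the module's source: in particular, attaching `tool_call_id` only when one is supplied is an assumption, and the `Role` alias is introduced here for brevity.

```python
from typing import Literal, Optional

Role = Literal["system", "user", "assistant", "tool"]


def form_msg(role: Role, content: str, tool_call_id: Optional[str] = None) -> dict:
    """Sketch of a message builder (assumed behavior, not the module's source)."""
    msg = {"role": role, "content": content}
    if tool_call_id is not None:
        # Assumption: the ID is attached only when the caller supplies one.
        msg["tool_call_id"] = tool_call_id
    return msg


def form_msgs(msgs: list[tuple[Role, str]]) -> list[dict]:
    """Sketch: expand (role, content) tuples into message dicts."""
    return [form_msg(role, content) for role, content in msgs]


conversation = form_msgs([
    ("system", "You can get weather information for a given location."),
    ("user", "What is the weather in New York?"),
])
conversation.append(form_msg("tool", '{"humidity": 80}', tool_call_id="call_demo"))
```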

print_msg

print_msg (msg:dict)

Print a message with role and content

| | Type | Details |
|---|---|---|
| msg | dict | The message to print with role and content |

print_msgs

print_msgs (msgs:list[dict], with_tool:bool=False)

| | Type | Default | Details |
|---|---|---|---|
| msgs | list | | The list of messages to print with role and content |
| with_tool | bool | False | Whether to print tool messages |
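A rough sketch of the two printers follows. The `>> Role:` header imitates the output shown on this page, but the rest is assumed: printing the raw dict for an assistant message without text, and treating assistant tool-call messages as tool traffic that `with_tool` hides, are guesses rather than documented behavior.

```python
def print_msg(msg: dict) -> None:
    """Sketch: print the capitalized role as a header, then the content."""
    print(f">> {msg['role'].capitalize()}:")
    # Assumption: a message without text (a tool call) is shown as the raw dict.
    print(msg["content"] if msg.get("content") is not None else msg)


def print_msgs(msgs: list[dict], with_tool: bool = False) -> None:
    """Sketch: print every message, skipping tool traffic unless `with_tool` is set."""
    for msg in msgs:
        is_tool_traffic = msg["role"] == "tool" or bool(msg.get("tool_calls"))
        if is_tool_traffic and not with_tool:
            continue
        print_msg(msg)
```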

Modularized execution function

This section implements the function calling (FC) workflow described above as a modular execution function.

fn_result_content

fn_result_content (call, tools=[])

Create a content containing the result of the function call

fn_exec

fn_exec (call, tools=[])

Execute the function call

fn_metadata

fn_metadata (tool)

fn_args

fn_args (call)

fn_name

fn_name (call)
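The helpers listed above are documented only by their signatures, so the following is one plausible reading rather than the module's actual code. It assumes that `call` is a tool-call dict as found in the raw response, that `tool` is one entry of the tools schema, and that the result content mirrors the format used earlier (the arguments plus the result keyed by function name). The fixup fallback is omitted, and `statistics.mean` stands in for a real tool.

```python
import importlib
import json
from typing import Any, Optional


def fn_name(call: dict) -> str:
    """Name of the function requested by a tool call."""
    return call["function"]["name"]


def fn_args(call: dict) -> dict:
    """Arguments of a tool call, decoded from their JSON string."""
    return json.loads(call["function"]["arguments"])


def fn_metadata(tool: dict) -> dict:
    """The `metadata` object of a tools-schema entry, or an empty dict."""
    return tool["function"].get("metadata", {})


def fn_exec(call: dict, tools: Optional[list] = None) -> Any:
    """Import the requested function from its metadata module and run it."""
    name = fn_name(call)
    tool = next((t for t in (tools or []) if t["function"]["name"] == name), None)
    if tool is None:
        raise LookupError(f"no tool named {name!r} in the schema")
    # Assumption: tools without a `module` entry live in `__main__`.
    module = importlib.import_module(fn_metadata(tool).get("module", "__main__"))
    return getattr(module, name)(**fn_args(call))


def fn_result_content(call: dict, tools: Optional[list] = None) -> str:
    """JSON content for the tool message: arguments plus the result under the function name."""
    return json.dumps({**fn_args(call), fn_name(call): fn_exec(call, tools)})


demo_tools = [{
    "type": "function",
    "function": {"name": "mean", "parameters": {}, "metadata": {"module": "statistics"}},
}]
demo_call = {
    "id": "call_demo",
    "type": "function",
    "function": {"name": "mean", "arguments": '{"data": [1, 2, 3]}'},
}
print(fn_result_content(demo_call, demo_tools))
```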

complete

complete (messages:list[dict], tools:list[dict]=[])

Complete the conversation with the given message

| | Type | Default | Details |
|---|---|---|---|
| messages | list | | The list of messages |
| tools | list | [] | The list of tools |
| **Returns** | tuple | | The role and content of the last message |

Test with the previously demonstrated example - using the get_weather_information function and updated tools with metadata containing the module:

messages = form_msgs([

("system", "You can get weather information for a given location using the `get_weather_information` function"),

("user", "What is the weather in New York?")

])

complete(messages, tools=tools)

print_msgs(messages, with_tool=True)

>> System:

You can get weather information for a given location using the `get_weather_information` function

>> User:

What is the weather in New York?

>> Assistant:

{'content': None, 'refusal': None, 'role': 'assistant', 'tool_calls': [{'id': 'call_D819bD5iC06S3OmMGUIjuZi9', 'function': {'arguments': '{"city":"New York"}', 'name': 'get_weather_information'}, 'type': 'function'}]}

>> Tool:

{"city": "New York", "get_weather_information": {"city": "New York", "zip_code": null,

"temparature": 25, "humidity": 80}}

>> Assistant:

The current weather in New York is 25°C with a humidity level of 80%.