Building a Real-Time Face Identification System: A Comprehensive Guide

In today’s world, face identification systems are becoming increasingly crucial in various applications, from security and surveillance to personalized customer experiences. Building a real-time face identification system can seem daunting, but with the right tools and approaches, it becomes manageable. In this article, we will walk you through a project to develop such a system, using some of the best tools available, including Ultralytics YOLOv8, managed cloud services (e.g. AWS Rekognition and Azure Face API), and more. We’ll also compare different technologies to help you choose the best one for your needs.

Step 1: Understanding the Core Requirements

Before diving into the development, it’s essential to outline the core requirements of your face identification system:

- Real-Time Performance: The system must detect and identify faces with minimal latency.

- Accuracy: High accuracy in face detection and recognition is crucial, especially in security applications.

- Scalability: Depending on the deployment environment, the system might need to scale to handle multiple video feeds simultaneously.

- Ease of Integration: The system should easily integrate with other components, such as databases and user management systems.
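"Minimal latency" becomes concrete at a given frame rate. If the stages run one after another, each frame must be fully processed within 1000 / FPS milliseconds. The sketch below checks that budget. The stage names and timings are hypothetical placeholders, so measure your own detector and recognizer on your target hardware.

```python
# Rough per-frame latency budget for a real-time pipeline.
# Stage timings in the example are hypothetical placeholders, not measurements.


def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame (in milliseconds) at a given FPS."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def fits_budget(stage_times_ms: dict[str, float], fps: float) -> bool:
    """True if the summed stage times fit within one frame interval."""
    return sum(stage_times_ms.values()) <= frame_budget_ms(fps)


if __name__ == "__main__":
    # Hypothetical timings for detection, cropping, and embedding + matching.
    stages = {"detect": 12.0, "crop": 1.0, "recognize": 15.0}
    print(f"Budget at 30 FPS: {frame_budget_ms(30):.1f} ms")
    print("Fits budget:", fits_budget(stages, 30))
```

This assumes strictly sequential processing per frame. If stages are pipelined across threads or GPUs, throughput can exceed what a single frame's end-to-end latency suggests.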

Step 2: Choosing the Right Technology Stack

Choosing the appropriate tools and technologies is critical to meeting the above requirements. Here’s a comparison of the available options:

| Service/Framework | Features | Object Detection | On-Site Deployment | Scalability | Best For | Cons |
|---|---|---|---|---|---|---|
| AWS Rekognition | Face detection, recognition, emotion analysis | Yes | No | High (cloud-native, scalable across AWS infrastructure) | Large-scale AWS users, cloud-first deployments | Cost, no native on-site solution |
| Azure Face API | Face detection, verification, identification | Yes | Yes (via containers) | High (scales across Azure cloud and on-site with containers) | Microsoft ecosystem, on-site deployment via containers | Cost, limited customization; identification requires Limited Access approval, and emotion recognition was retired in 2022 |
| Google Cloud Vision | Face detection, image classification | Yes | No | High (scales across Google Cloud infrastructure) | Broad vision needs, Google Cloud | Detects faces but does not identify individuals, no on-site solution |
| IBM Watson | Face detection, customizable models | Yes | Yes | High (scales with IBM cloud or on-premise solutions) | Privacy-conscious industries, on-site solutions | Complexity, cost; IBM stopped offering general-purpose facial recognition in 2020 and has discontinued Watson Visual Recognition |
| Face++ | Face detection, beauty score, emotion analysis | Yes | Yes | Moderate (scales well but on-site deployment requires more effort) | Fast, high-accuracy applications, on-site solutions | Privacy concerns, on-site deployment can be complex |
| Clarifai | Face detection, customizable models | Yes | Yes (via on-site SDK) | Moderate (scales well in the cloud, SDK can be scaled on-premises) | Rapid prototyping, easy integration, on-site deployment | Cost, potential latency in cloud deployments |
| Ultralytics YOLOv8 | Real-time object detection (faces after fine-tuning on face data), fully customizable models | Yes | Yes | High (scales based on deployment environment, requires management) | Developers seeking control, on-site deployment, flexibility | Requires setup and management |

Each of these tools has strengths and weaknesses depending on your project’s needs.

Step 3: Implementing with Ultralytics YOLOv8

We’ll focus on using Ultralytics YOLOv8, given its balance between real-time performance and customization. YOLOv8 is well-suited for applications requiring high-speed detection and the flexibility to train on custom datasets.

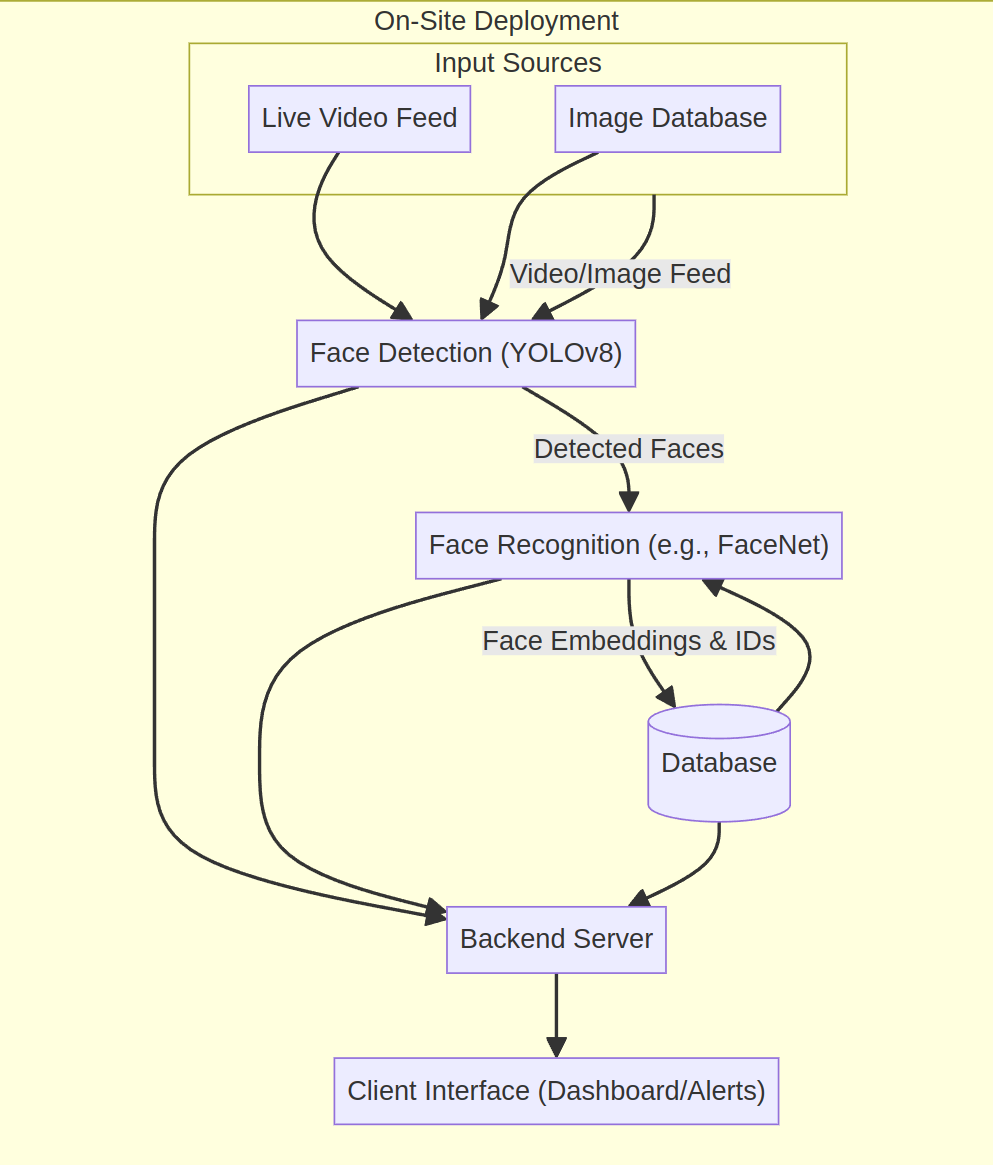

The real-time face identification system architecture integrates Ultralytics YOLOv8 for face detection with a face recognition model (such as FaceNet) for identifying individuals. The system processes live video feeds or stored images through YOLOv8, which detects faces and outputs bounding boxes. These are passed to a backend server that crops the face regions and sends them to the face recognition module. This module generates facial embeddings, compares them against a database of known identities, and identifies the person. The results are displayed in real time on a client interface, such as a dashboard or alert system.

This architecture can be deployed on-site, ensuring data privacy and low latency, and is designed to scale by adding processing nodes as needed, making it suitable for security, access control, and personalized services.
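One way to scale by adding processing nodes is a simple round-robin assignment of camera streams to nodes. The sketch below is a minimal illustration under assumed names. The node names, camera IDs, and the `assign_cameras` helper are hypothetical, and this is not a production scheduler.

```python
# Distribute camera streams across processing nodes round-robin.
# Node names and camera IDs are hypothetical; a real deployment would also
# account for per-node GPU capacity and rebalance when nodes join or fail.


def assign_cameras(camera_ids: list[str], nodes: list[str]) -> dict[str, list[str]]:
    """Map each node to the list of cameras it should process."""
    if not nodes:
        raise ValueError("at least one processing node is required")
    assignment: dict[str, list[str]] = {node: [] for node in nodes}
    for i, cam in enumerate(camera_ids):
        assignment[nodes[i % len(nodes)]].append(cam)
    return assignment


if __name__ == "__main__":
    cameras = [f"cam-{i:03d}" for i in range(200)]
    plan = assign_cameras(cameras, ["node-a", "node-b", "node-c", "node-d"])
    for node, cams in plan.items():
        print(node, len(cams))  # each of the 4 nodes gets 50 cameras
```

To add a node, append it to the list and recompute the plan. Round-robin balances load well only if every stream costs roughly the same to process.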

Setting Up YOLOv8

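Install the Ultralytics Package: Begin by installing the necessary software using pip:

```bash
pip install ultralytics
```

Load the YOLOv8 Model: Load a pre-trained YOLOv8 model or start with a custom model. The pre-trained `yolov8n.pt` weights are trained on the COCO dataset, which has no "face" class. They will detect people and other objects, but not faces, until you fine-tune them as described below.

```python
from ultralytics import YOLO

# Load a YOLOv8 model (COCO-pretrained nano weights)
model = YOLO('yolov8n.pt')

# Perform inference on an image; the call returns a list of Results objects
results = model('path/to/your/image.jpg')
results[0].show()  # Display the annotated result for the first (and only) image
```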

Training on a Custom Dataset

To fine-tune YOLOv8 for face detection, you need a dataset of face images with annotations in YOLO format. You can use public datasets such as WIDER FACE or CelebA, but their bounding-box annotations must first be converted to YOLO format.

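Prepare Your Dataset: Give every image a matching `.txt` label file with one line per face. Each line holds the class index followed by the box's center x, center y, width, and height, each normalized by the image width or height to the range 0 to 1. Split the images and labels into training and validation sets.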
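Converting annotations to YOLO format is mostly arithmetic. The sketch below assumes boxes are given as top-left x, top-left y, width, and height in pixels. That is the layout of WIDER FACE's ground-truth files, which also carry attribute flags such as `invalid` that you may want to filter on first. The helper names are hypothetical, and class 0 is assumed to be `face`.

```python
# Convert pixel-space face boxes to YOLO label lines.
# Assumes boxes are (x_top_left, y_top_left, width, height) in pixels.
# Class 0 is assumed to be "face".


def to_yolo_line(box, img_w: int, img_h: int, class_id: int = 0) -> str:
    """Return 'class cx cy w h' with coordinates normalized to [0, 1]."""
    x, y, w, h = box
    cx = (x + w / 2) / img_w
    cy = (y + h / 2) / img_h
    return f"{class_id} {cx:.6f} {cy:.6f} {w / img_w:.6f} {h / img_h:.6f}"


def to_yolo_label(boxes, img_w: int, img_h: int) -> str:
    """Build the contents of one .txt label file (one face per line).

    Degenerate boxes with zero width or height are skipped.
    """
    lines = [to_yolo_line(b, img_w, img_h) for b in boxes if b[2] > 0 and b[3] > 0]
    return "\n".join(lines) + ("\n" if lines else "")


if __name__ == "__main__":
    # A 100x50 px face whose top-left corner is at (200, 100) in a 1000x500 image.
    print(to_yolo_line((200, 100, 100, 50), 1000, 500))
    # prints: 0 0.250000 0.250000 0.100000 0.100000
```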

Configure and Train the Model: Train YOLOv8 using your dataset:

```python
from ultralytics import YOLO

model = YOLO('yolov8n.pt')  # start from COCO-pretrained weights
model.train(data='path/to/data.yaml', epochs=100, imgsz=640, batch=16)
```
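The `data.yaml` file passed to `model.train` tells Ultralytics where the dataset lives and what the classes are. The sketch below generates one for a single `face` class. The directory names (`datasets/faces`, `images/train`, `images/val`) are assumptions. Ultralytics finds each image's label file by replacing `images` with `labels` in its path, so labels would go under `labels/train` and `labels/val`.

```python
# Generate a minimal Ultralytics dataset config (data.yaml) for a one-class
# face detector. The directory layout is an assumption; adjust the paths to
# wherever your converted images and labels actually live.
from pathlib import Path


def make_data_yaml(root: str, train: str = "images/train", val: str = "images/val") -> str:
    """Return YAML text describing the dataset with a single 'face' class."""
    return (
        f"path: {root}\n"
        f"train: {train}\n"
        f"val: {val}\n"
        "names:\n"
        "  0: face\n"
    )


def write_data_yaml(root: str, out_file: str = "data.yaml") -> Path:
    """Write the config to disk and return its path."""
    out = Path(out_file)
    out.write_text(make_data_yaml(root))
    return out


if __name__ == "__main__":
    print(make_data_yaml("datasets/faces"))
```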

Deploying for Real-Time Face Detection

Once trained, you can deploy YOLOv8 for real-time face detection. This can be done by feeding live video streams into the model and processing each frame.

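```python
import cv2
from ultralytics import YOLO

# Load the trained model. Ultralytics saves the best checkpoint here by default;
# the run directory becomes train2, train3, ... if you train more than once.
model = YOLO('runs/detect/train/weights/best.pt')

# Capture video from the default webcam
cap = cv2.VideoCapture(0)

while cap.isOpened():
    ret, frame = cap.read()
    if not ret:
        break

    # Perform inference on the current frame
    results = model(frame, verbose=False)

    # Draw the detections and display the annotated frame
    annotated = results[0].plot()
    cv2.imshow('YOLOv8 Face Detection', annotated)

    # Press 'q' to quit
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()
```

Step 4: Integrating Face Recognition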

While YOLOv8 handles face detection, face identification (recognition) requires another model. Popular options include FaceNet or ArcFace. After detecting faces, crop the face regions and pass them to a recognition model.
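A common way to implement identification is to turn each cropped face into an embedding vector and compare it against stored embeddings of known people using cosine similarity. The sketch below assumes the embeddings already exist; random vectors stand in for FaceNet or ArcFace output. The `identify` helper and its 0.5 threshold are hypothetical, and the threshold must be tuned on your own data.

```python
# Identify a face by comparing its embedding against a gallery of known people.
# Embeddings would come from a recognition model such as FaceNet or ArcFace;
# here they are plain NumPy vectors. The default threshold is a placeholder.
import numpy as np


def l2_normalize(v: np.ndarray) -> np.ndarray:
    """Scale vectors to unit length so that a dot product equals cosine similarity."""
    return v / np.linalg.norm(v, axis=-1, keepdims=True)


def identify(embedding: np.ndarray, gallery: dict, threshold: float = 0.5):
    """Match one face embedding against a gallery of known identities.

    Returns (name, similarity) for the most similar identity,
    (None, similarity) if even the best match falls below `threshold`,
    or (None, None) if the gallery is empty.
    """
    if not gallery:
        return None, None
    names = list(gallery)
    known = l2_normalize(np.stack([gallery[n] for n in names]))  # shape (N, D)
    query = l2_normalize(embedding)                             # shape (D,)
    scores = known @ query                                      # cosine similarities, shape (N,)
    best = int(np.argmax(scores))
    best_score = float(scores[best])
    if best_score < threshold:
        return None, best_score
    return names[best], best_score


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Random 128-D vectors stand in for real face embeddings.
    gallery = {"alice": rng.normal(size=128), "bob": rng.normal(size=128)}
    probe = gallery["alice"] + 0.05 * rng.normal(size=128)  # slightly perturbed "re-capture" of Alice
    print(identify(probe, gallery))  # best match is "alice"
```

A hypothetical `recognize_face(face_img)` could then resize and normalize the crop, run the recognition model to get an embedding, and return `identify(embedding, gallery)`. The brute-force matrix product works well for thousands of identities. Much larger galleries usually call for an approximate nearest-neighbor index.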

```python
# Example of integrating face recognition after detection
# (`frame` and `results` come from the detection loop above)
for box in results[0].boxes:
    x1, y1, x2, y2 = map(int, box.xyxy[0].tolist())
    conf = float(box.conf[0])
    cls = int(box.cls[0])
    face_img = frame[y1:y2, x1:x2]

    # Pass the cropped face to your recognition model
    face_id = recognize_face(face_img)  # hypothetical helper wrapping a model such as FaceNet
```

Step 5: Simple Streamlit UI

A minimal example for illustration:

```python
import cv2
import streamlit as st
from ultralytics import YOLO

# Load YOLOv8 model for detection (replace with your face-trained weights;
# the COCO-pretrained yolov8n.pt has no "face" class)
model = YOLO('yolov8n.pt')

# Streamlit UI setup
st.title("Real-Time Face Detection")
st.text("Streaming live video with YOLOv8 face detection")

# RTSP setup: enter an RTSP URL, or a local webcam index such as 0
# Example: 'rtsp://username:password@IP_ADDRESS:PORT/stream1'
source = st.text_input("Enter RTSP URL or webcam index:", "rtsp://username:password@IP_ADDRESS:PORT/stream1")

# Toggle the stream with a checkbox; unchecking it reruns the script and ends the loop
run = st.checkbox("Run stream")

# Placeholder that is overwritten with each new frame
frame_display = st.empty()

if run:
    # OpenCV expects an int for a local webcam and a string for a URL
    cap = cv2.VideoCapture(int(source) if source.isdigit() else source)

    while cap.isOpened():
        ret, frame = cap.read()
        if not ret:
            st.write("Failed to retrieve frame. Exiting...")
            break

        # Perform face detection using YOLOv8
        results = model(frame, verbose=False)

        # Draw bounding boxes on the frame
        for box in results[0].boxes:
            x1, y1, x2, y2 = map(int, box.xyxy[0].tolist())
            cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 2)

        # Convert the frame from OpenCV's BGR to RGB and display it in Streamlit
        frame_display.image(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB), channels="RGB")

    # Release the video capture
    cap.release()
```

Step 6: Evaluating Alternatives

If your project doesn’t require the flexibility of YOLOv8 or you want to offload the complexity of training and deployment, consider managed services like AWS Rekognition or Azure Face API. These services are easy to integrate and scale automatically but come at the cost of flexibility and higher operational expenses.

- AWS Rekognition: Ideal for applications deeply integrated with AWS, offering face detection, recognition, and emotion analysis.

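- Azure Face API: Best for users within the Microsoft ecosystem, offering face detection, verification, and identification. Identification and verification require approval under Microsoft's Limited Access policy.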

- Google Cloud Vision: Suitable for broader computer vision needs beyond face detection.

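- IBM Watson: Historically positioned for industries with strict data regulations. However, IBM stopped offering general-purpose facial recognition in 2020 and has since discontinued Watson Visual Recognition, so verify current availability before planning around it.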

- Face++: Offers high accuracy and additional features like beauty scoring, though with potential privacy concerns.
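For comparison with the self-hosted pipeline, here is a minimal sketch of identification with AWS Rekognition through boto3's `search_faces_by_image`. It assumes a face collection (the name `employees` is hypothetical) was already created with `create_collection` and populated with `index_faces`, with each person's identifier stored in `ExternalImageId`. The `client` argument is any object with a `search_faces_by_image` method, such as `boto3.client("rekognition")`, which also makes the function easy to test with a stub.

```python
# Look up a face crop in an AWS Rekognition face collection.
# Assumes the collection already exists and was populated with index_faces,
# storing each person's identifier in ExternalImageId.
from typing import Optional


def rekognition_identify(client, collection_id: str, jpeg_bytes: bytes,
                         threshold: float = 90.0) -> Optional[str]:
    """Return the ExternalImageId of the best match at or above `threshold`, else None."""
    response = client.search_faces_by_image(
        CollectionId=collection_id,
        Image={"Bytes": jpeg_bytes},
        MaxFaces=1,
        FaceMatchThreshold=threshold,  # similarity threshold on a 0-100 scale
    )
    matches = response.get("FaceMatches", [])
    if not matches:
        return None
    best = max(matches, key=lambda m: m["Similarity"])
    return best["Face"].get("ExternalImageId")
```

Rekognition searches using the largest face in the submitted image and raises an `InvalidParameterException` when it finds no face. Pass one crop at a time (for example, `cv2.imencode(".jpg", face_img)[1].tobytes()`) and handle that error in production code.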

Step 7: Computational Resources

To determine how many rack-mount servers you’d need for a face identification system involving 200 cameras using GPUs like the NVIDIA RTX 3080, 3090, or 4090, we need to consider several factors:

Key Factors:

- GPUs outperform CPUs for this kind of inference when cost is weighed against performance.

- Processing Power: Each GPU’s ability to handle a specific number of frames per second (FPS).

- Total FPS Requirement: The total computational load imposed by the 200 cameras.

- Rack-Mount Server Specifications: The number of GPUs each server can support.

Calculating Total FPS Requirement:

- 200 Cameras at 30 FPS each:
  - Total FPS required = 200 cameras * 30 FPS = 6000 FPS.

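GPU Capacity:

Here "YOLOv8-tiny" refers to the nano model, YOLOv8n (Ultralytics does not publish a model named "tiny"), and "standard YOLOv8" refers to a larger model size. All figures are rough estimates. Actual throughput depends on input resolution, batch size, numeric precision, and inference backend.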

- NVIDIA RTX 3080: Can handle approximately 400 FPS for YOLOv8-tiny and around 80-100 FPS for standard YOLOv8.

- NVIDIA RTX 3090: Can handle approximately 600 FPS for YOLOv8-tiny and around 120-150 FPS for standard YOLOv8.

- NVIDIA RTX 4090: Can handle approximately 1000 FPS for YOLOv8-tiny and around 200-250 FPS for standard YOLOv8.

Example Server Configurations:

- Supermicro 4U GPU Server (SYS-4029GP-TRT):
  - Supports up to 4 GPUs.
  - If each server uses 4x RTX 4090 GPUs, the total processing capability per server would be:
    - YOLOv8-tiny: 4000 FPS (4 GPUs * 1000 FPS).
    - Standard YOLOv8: 800-1000 FPS (4 GPUs * 200-250 FPS).

- ASUS ESC8000A-E11:
  - Supports up to 8 GPUs.
  - With 8x RTX 4090 GPUs:
    - YOLOv8-tiny: 8000 FPS (8 GPUs * 1000 FPS).
    - Standard YOLOv8: 1600-2000 FPS (8 GPUs * 200-250 FPS).

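Number of Servers Needed (6000 FPS divided by per-server capacity, rounded up):

- For YOLOv8-tiny:
  - With Supermicro 4U Server: 6000 / 4000 = 1.5 servers (round up to 2 servers).
  - With ASUS ESC8000A-E11: 6000 / 8000 = 0.75, so 1 server is sufficient.

- For Standard YOLOv8:
  - With Supermicro 4U Server: 6000 / 1000 = 6 to 6000 / 800 = 7.5, so 6-8 servers.
  - With ASUS ESC8000A-E11: 6000 / 2000 = 3 to 6000 / 1600 = 3.75, so 3-4 servers.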
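The server count is the total frame rate divided by per-server capacity, rounded up. The minimal sketch below uses the rough per-GPU estimates quoted above as inputs; they are not benchmarks.

```python
# Back-of-the-envelope server count for detection throughput.
# Per-GPU FPS values are the rough estimates from this section, not benchmarks;
# substitute measured numbers for your model and hardware.
import math


def servers_needed(cameras: int, fps_per_camera: float,
                   gpus_per_server: int, fps_per_gpu: float) -> int:
    """Smallest whole number of servers whose combined GPU throughput covers the load."""
    total_fps = cameras * fps_per_camera
    per_server = gpus_per_server * fps_per_gpu
    return math.ceil(total_fps / per_server)


if __name__ == "__main__":
    # 200 cameras at 30 FPS = 6000 FPS of detection load.
    print(servers_needed(200, 30, 4, 1000))  # 4x RTX 4090, YOLOv8-tiny -> 2
    print(servers_needed(200, 30, 8, 250))   # 8x RTX 4090, standard YOLOv8, best case -> 3
    print(servers_needed(200, 30, 8, 200))   # 8x RTX 4090, standard YOLOv8, worst case -> 4
```

The function assumes 100% GPU utilization. To get a more conservative count, scale `fps_per_gpu` down by a utilization target, for example multiplying it by a hypothetical 0.75.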

SUMMARY

- YOLOv8-tiny: You would need 1-2 servers (depending on GPU configuration) to handle the 200 cameras.

- Standard YOLOv8: You would need 3-8 servers depending on the specific GPU and server model you choose.

These estimates assume maximum utilization of each GPU and cover detection only. The actual number might vary with real-world conditions, such as the load of decoding 200 video streams, CPU bottlenecks, memory limitations, and the extra work of running the recognition model on every detected face.

Step 8: Storage Requirement

This step outlines the storage needed to record video continuously from 200 cameras at 2MP and 4MP resolution over a 30-day period. The calculation is driven by bitrate, which itself depends on resolution, frame rate, and compression method.

Key Parameters

- Bitrate by resolution:
  - 2MP (1080p): Approximately 3 Mbps
  - 4MP: Approximately 6 Mbps

- Frame Rate: 30 frames per second (FPS)

- Recording Time: 24 hours per day, 30 days per month

- Number of Cameras: 200

- Compression: H.264 assumed (H.265 could reduce storage by up to 50%)

Storage Calculation

The total storage required can be calculated using the following equation:

\[ \text{Total Storage (TB)} = \frac{\text{Bitrate (Mbps)} \times 86{,}400 \times 30 \times \text{Number of Cameras}}{8 \times 10^{6}} \]

Where:

- 86,400: Number of seconds in a day

- 30: Number of days in a month

- 8: Conversion factor from bits to bytes

- 10^6: Conversion from megabytes to terabytes, using decimal units to match the decimal "mega" in Mbps (divide the result by about 1.0995 to get binary tebibytes, TiB)

Results

- For 2MP Cameras (3 Mbps):
  - Total Storage: 3 * 86,400 * 30 * 200 / (8 * 10^6) = approximately 194.4 TB (about 176.8 TiB) per month.

- For 4MP Cameras (6 Mbps):
  - Total Storage: Approximately 388.8 TB (about 353.6 TiB) per month.

Considerations

- Compression: Using H.265 compression could potentially reduce the storage requirement by 50%.

- Motion Detection: If motion detection is used instead of continuous recording, the required storage could be significantly less.

SUMMARY

For 200 cameras recording continuously at 2-4MP resolution, the storage requirement ranges from approximately 194.4 TB to 388.8 TB per month. Adjustments to compression methods, frame rates, or recording strategies could alter these estimates.
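The storage estimate can be checked with a short calculator. This sketch assumes the approximate H.264 bitrates listed above. It reports both decimal terabytes (10^12 bytes) and binary tebibytes (2^40 bytes), because storage vendors and operating systems often use different units.

```python
# Continuous-recording storage estimate for a camera fleet.
# Bitrates are the approximate figures assumed in this step.

SECONDS_PER_DAY = 86_400


def storage_bytes(bitrate_mbps: float, cameras: int, days: int = 30) -> float:
    """Total bytes written by `cameras` streams at `bitrate_mbps` for `days` days."""
    bits = bitrate_mbps * 1_000_000 * SECONDS_PER_DAY * days * cameras
    return bits / 8


def storage_tb(bitrate_mbps: float, cameras: int, days: int = 30) -> float:
    """Storage in decimal terabytes (10**12 bytes)."""
    return storage_bytes(bitrate_mbps, cameras, days) / 10**12


def storage_tib(bitrate_mbps: float, cameras: int, days: int = 30) -> float:
    """Storage in binary tebibytes (2**40 bytes)."""
    return storage_bytes(bitrate_mbps, cameras, days) / 2**40


if __name__ == "__main__":
    for label, mbps in [("2MP", 3), ("4MP", 6)]:
        print(f"{label}: {storage_tb(mbps, 200):.1f} TB ({storage_tib(mbps, 200):.1f} TiB)")
```

Halving the bitrate, which H.265 may allow, halves every figure; for example, call `storage_tb(1.5, 200)`.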

Conclusion

Building a real-time face identification system requires careful selection of tools and technologies based on your specific requirements. Ultralytics YOLOv8 offers a powerful, customizable solution for developers who need control over the face detection process, while managed services like AWS Rekognition and Azure Face API provide easier, scalable alternatives for those looking to avoid the complexities of model training and deployment.

Choosing the right stack involves balancing performance, flexibility, and ease of use. With the tools discussed in this guide, you’re well-equipped to build a robust, real-time face identification system tailored to your needs.