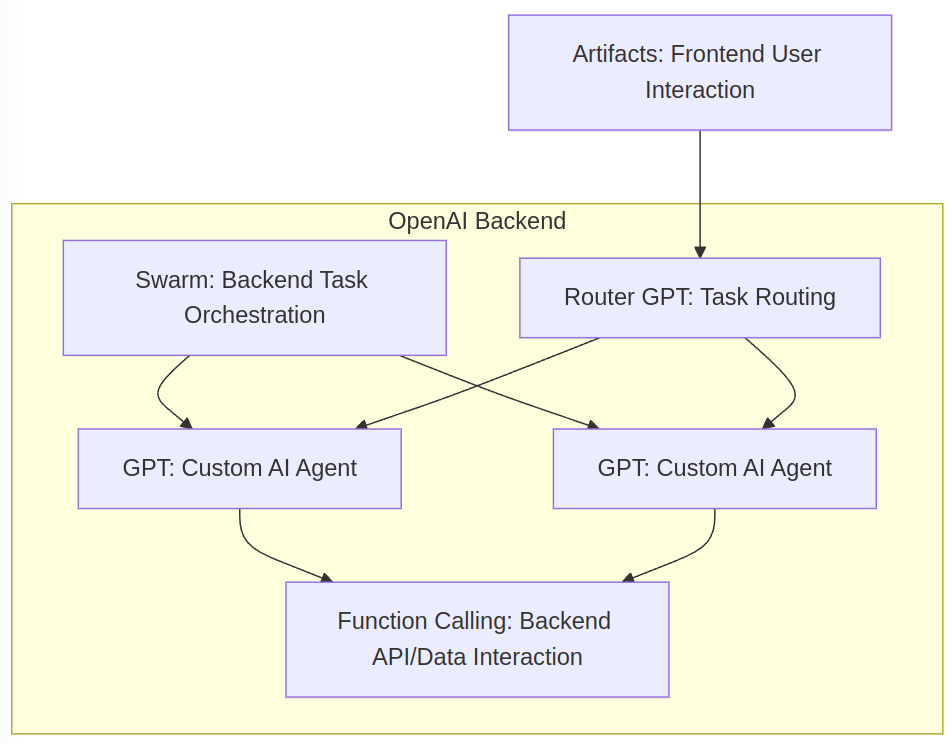

How to Build an LLM Web Service Effortlessly with OpenAI Swarm, GPTs, Function Calling, and Anthropic Artifacts

Building an LLM-based web service can be a complex process, requiring you to balance scalability, flexibility, real-time data handling, and user interaction. But by using the right combination of tools like OpenAI’s Swarm, GPTs, Function Calling, and Anthropic’s Artifacts, you can make this process much more efficient and manageable.

In this post, we’ll walk you through how to create a scalable and dynamic LLM web service by integrating these technologies and show you how to build a powerful solution with minimal effort.

Step 1: Define Your Web Service’s Key Functions

Before diving into the technical tools, clearly define what your web service should do. Consider questions like:

- Will users interact with your AI through uploaded files, documents, or data inputs?

- Does the web service need to fetch real-time data (e.g., weather, stock prices)?

- How many different tasks (e.g., summarization, content generation, user support) will the service handle?

Once you have a clear vision of the key functions, you can start integrating the right tools.
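One lightweight way to record the answers is a small capability inventory that the later steps can consult. The sketch below is a hypothetical planning aid, not part of any of the tools discussed; the task names and fields are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Capability:
    """One task the service must support (hypothetical planning record)."""
    name: str
    needs_realtime_data: bool  # Does it need a live API, e.g. weather or stock prices?
    accepts_files: bool        # Do users upload documents or images for it?


# Illustrative inventory for an example service; replace with your own answers.
CAPABILITIES = [
    Capability("customer_support", needs_realtime_data=False, accepts_files=False),
    Capability("content_generation", needs_realtime_data=False, accepts_files=False),
    Capability("document_summary", needs_realtime_data=False, accepts_files=True),
    Capability("market_lookup", needs_realtime_data=True, accepts_files=False),
]


def tasks_needing(predicate: Callable[[Capability], bool]) -> list[str]:
    """Return the names of the capabilities that match a predicate."""
    return [c.name for c in CAPABILITIES if predicate(c)]


if __name__ == "__main__":
    print("Needs function calling:", tasks_needing(lambda c: c.needs_realtime_data))
    print("Needs file handling:", tasks_needing(lambda c: c.accepts_files))
```

Tasks flagged `needs_realtime_data` point toward Function Calling (Step 3), and tasks flagged `accepts_files` point toward upload handling (Step 5).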

Step 2: Set Up Your GPTs for Customizable AI Interactions

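GPTs are custom versions of ChatGPT that you configure without code, combining instructions, optional knowledge files, and capabilities such as web search or Actions that call external APIs. They do not create new models, and they are built and used inside ChatGPT, so in your own web service you reproduce a GPT’s behavior by sending the same instructions through the OpenAI API. You can use a different GPT (or its API equivalent) for each kind of user interaction.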

Example:

- A customer support GPT can answer user queries, retrieve FAQs, or handle common troubleshooting requests.

- A content generation GPT can be set up to create blog posts, marketing materials, or other forms of content based on user inputs.

Why GPTs?

- No-code setup: You can easily configure GPTs to handle specific tasks without writing extensive code.

- Customizable: Each GPT can be tailored to a different business need or function.

By using GPTs, you can quickly prototype an assistant for each function of your web service and then carry its instructions over to the API.
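Since custom GPTs run inside ChatGPT, a web service typically recreates each one as a system prompt sent through the OpenAI API. The sketch below only builds the message list in the Chat Completions format (a list of `role`/`content` dictionaries); the profile names and instruction text are hypothetical placeholders for your own GPTs’ instructions.

```python
from typing import Optional

# Hypothetical specialist profiles mirroring the two example GPTs above.
# Paste each real GPT's instructions in place of this illustrative text.
PROFILES = {
    "support": (
        "You are a customer support assistant. Answer from the FAQ when "
        "possible and ask for details before troubleshooting."
    ),
    "content": (
        "You are a content writer. Produce blog posts and marketing copy "
        "in the tone the user requests."
    ),
}


def build_messages(
    profile: str, user_input: str, history: Optional[list[dict]] = None
) -> list[dict]:
    """Assemble a Chat Completions-style message list for one specialist.

    `history` is an optional list of earlier {"role", "content"} messages.
    """
    if profile not in PROFILES:
        raise KeyError(f"unknown profile: {profile!r}")
    messages = [{"role": "system", "content": PROFILES[profile]}]
    messages.extend(history or [])
    messages.append({"role": "user", "content": user_input})
    return messages


if __name__ == "__main__":
    msgs = build_messages("support", "My invoice is missing.")
    for m in msgs:
        print(m["role"], "->", m["content"][:40])
    # With the official `openai` package and an API key, the list could be sent as:
    # client.chat.completions.create(model="<model name>", messages=msgs)
```

Keeping the instructions in one dictionary means the prompt you tested in ChatGPT and the prompt your service sends stay in sync.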

Step 3: Add Function Calling for Real-Time Data Integration

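When your service needs to interact with external data sources, Function Calling lets the model request that a specific function be run, for example to fetch real-time information. The model does not execute anything itself: it returns the function name and JSON arguments, your application runs the call, and the result goes back to the model to shape the reply. (Inside a custom GPT, the no-code counterpart is an Action described by an OpenAPI schema.)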

Example:

- A user asks for the latest stock price or current weather. The model requests a call to your weather or stock function, your code queries the external API, and the model turns the result into a reply in real time.

- A user needs to schedule a meeting or retrieve information from an internal database. Function Calling lets the model pass structured arguments (such as a date and a list of attendees) to code that talks to your calendar API or database.

Why Function Calling?

- Real-time capabilities: The model can work with live data, making your web service more dynamic and responsive.

- External integrations: You can connect the model to third-party APIs and internal systems, while your own code controls what each function is allowed to do.
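The round trip can be sketched in two parts: a function description in the JSON Schema format the Chat Completions `tools` parameter accepts, and a dispatcher that runs the function the model asked for and packages the result as a `tool` message. `get_weather` is a hypothetical stub returning canned data instead of calling a real weather service, and the tool call is a hand-written dictionary shaped like the one the API returns.

```python
import json

# Function description sent to the model via the `tools` parameter.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get the current weather for a city.",
            "parameters": {
                "type": "object",
                "properties": {
                    "city": {"type": "string", "description": "City name, e.g. Paris"},
                },
                "required": ["city"],
            },
        },
    }
]


def get_weather(city: str) -> dict:
    """Hypothetical stub; a real version would query a weather API."""
    return {"city": city, "temp_c": 18, "conditions": "cloudy"}


# Maps function names the model may request to local Python callables.
REGISTRY = {"get_weather": get_weather}


def run_tool_call(tool_call: dict) -> dict:
    """Execute one model-requested call and build the `tool` reply message."""
    fn = REGISTRY[tool_call["function"]["name"]]
    args = json.loads(tool_call["function"]["arguments"])  # arguments arrive as a JSON string
    result = fn(**args)
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],
        "content": json.dumps(result),
    }


if __name__ == "__main__":
    example_call = {  # hand-written example of a tool call from the model
        "id": "call_1",
        "type": "function",
        "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'},
    }
    print(run_tool_call(example_call))
```

In a full exchange, you append the assistant message that contained the tool call and then this `tool` message to the conversation before asking the model for its final answer. Keeping a registry of allowed functions also means the model can only trigger code you have explicitly exposed.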

Step 4: Use Swarm to Orchestrate and Scale Your Web Service

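Swarm is an experimental, educational framework from OpenAI for orchestrating multiple agents (OpenAI now describes its Agents SDK as the production-ready evolution of Swarm). Each agent bundles instructions with functions and can mirror one of the GPT roles from Step 2; agents pass a conversation to one another through handoffs. Swarm runs on the client side on top of the Chat Completions API and keeps no state between calls.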

Example:

- When users submit different kinds of requests (e.g., data analysis, customer support queries, real-time data fetches), a triage agent can hand each conversation to the specialist agent suited to it.

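- Swarm manages the flow within a conversation, switching the active agent and executing its functions. It does not balance load across agents; keeping the service fast under heavy traffic is the job of your web server, which can run many independent Swarm conversations concurrently.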

Why Swarm?

- Lightweight orchestration: Agents and handoffs are plain Python objects and functions, so routing logic stays easy to read and test.

- Modularity: As your web service grows, you can add new specialist agents and handoff functions without rewriting the existing ones.
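Swarm’s central rule is the handoff: when a function attached to the active agent returns another agent, the conversation continues with that agent. The library-free sketch below mimics only that rule so it runs without an API key. The `Agent` class is a simplified stand-in rather than Swarm’s own, `get_stock_price` is a hypothetical tool, and the model’s choice of function is passed in by hand. With the real package, you would define agents with `swarm.Agent` and start a conversation with `Swarm().run(agent=..., messages=...)`.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Simplified stand-in for swarm.Agent: a name, instructions, and tools."""
    name: str
    instructions: str
    functions: list[Callable] = field(default_factory=list)


def get_stock_price() -> str:
    """Hypothetical tool; a real one would take a ticker and call an API."""
    return "123.45"


support_agent = Agent("Support", "Resolve account and billing questions.")
data_agent = Agent("Data", "Answer with live data.", functions=[get_stock_price])


def transfer_to_support() -> Agent:
    """Handoff function: returning an Agent switches the active agent."""
    return support_agent


def transfer_to_data() -> Agent:
    """Handoff function to the live-data specialist."""
    return data_agent


triage_agent = Agent(
    "Triage",
    "Hand the user to the right specialist.",
    functions=[transfer_to_support, transfer_to_data],
)


def run_turn(agent: Agent, chosen_function: str) -> Agent:
    """Run the function the model picked and return the next active agent.

    `chosen_function` stands in for the model's decision, which Swarm would
    get from a Chat Completions call (hypothetical simplification).
    """
    by_name = {f.__name__: f for f in agent.functions}
    result = by_name[chosen_function]()
    # An Agent result is a handoff; any other result would go back to the
    # model as a tool message, so the active agent stays the same here.
    return result if isinstance(result, Agent) else agent


if __name__ == "__main__":
    active = run_turn(triage_agent, "transfer_to_data")
    print("Active agent:", active.name)
```

A handoff only changes which instructions and tools apply to the next model call, which keeps each agent small and easy to test in isolation.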

Step 5: Prototype Frontend Interaction with Anthropic’s Artifacts

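Artifacts is a feature of Anthropic’s Claude.ai that shows substantial content Claude generates, such as code, documents, SVG graphics, HTML pages, and React components, in a dedicated window next to the chat. It is not a file-management service for GPTs, but it is a quick way to prototype your web service’s frontend, such as an upload form or results view, before wiring it to your backend.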

Example:

- Users upload a PDF document or image that needs to be summarized, analyzed, or processed. You can ask Claude to generate the upload and results interface as an artifact, while your backend stores the file and passes its contents to the model, which returns insights or summaries to the user.

- Storing user-uploaded data for ongoing workflows is a backend concern: keep files in your own storage so any agent can retrieve them later. Artifacts helps you design how structured and unstructured data is presented, not where it lives.

Why Artifacts?

- Fast frontend prototyping: You describe an interface in plain language and immediately see a working version, such as an HTML page or React component.

- Clear division of labor: The artifact handles presentation, while your backend and models handle file processing and analysis.
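Whatever frontend you use, the backend still has to turn an uploaded document into text the model can read. For long files, a common approach is to split the text into chunks, summarize each chunk, and then summarize the partial summaries. The sketch below covers only the splitting step. It assumes the upload has already been converted to plain text (PDF extraction needs a separate library), and the 4,000-character default is an arbitrary example, not a model limit.

```python
def chunk_text(text: str, max_chars: int = 4000) -> list[str]:
    """Split text into chunks of at most max_chars, preferring paragraph breaks.

    Paragraphs are separated by blank lines; any paragraph longer than
    max_chars is cut into fixed-size pieces.
    """
    if max_chars <= 0:
        raise ValueError("max_chars must be positive")
    chunks: list[str] = []
    current = ""
    for para in text.split("\n\n"):
        para = para.strip()
        if not para:
            continue  # skip empty paragraphs
        # Hard-split a paragraph that cannot fit in one chunk on its own.
        pieces = [para[i:i + max_chars] for i in range(0, len(para), max_chars)]
        for piece in pieces:
            candidate = f"{current}\n\n{piece}" if current else piece
            if len(candidate) <= max_chars:
                current = candidate  # still fits: keep growing this chunk
            else:
                chunks.append(current)  # flush the full chunk, start a new one
                current = piece
    if current:
        chunks.append(current)
    return chunks


if __name__ == "__main__":
    sample = "First paragraph.\n\nSecond paragraph.\n\n" + "x" * 50
    for i, chunk in enumerate(chunk_text(sample, max_chars=40)):
        print(i, len(chunk), repr(chunk[:20]))
```

Each chunk can then be sent to the summarization model, and the partial summaries combined in one final request.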

Step 6: Integrate a Router GPT for Efficient Task Management

A Router GPT (in Swarm terms, a triage agent) examines each request and passes it to the appropriate GPT agent or function. This streamlines task management by ensuring that the right specialist handles each user request.

Example:

- When a user uploads a document, the Router GPT directs it to the summarization GPT.

- If the user asks for real-time information, the Router GPT automatically triggers Function Calling to fetch data from an external API.

Why Router GPT?

- Smart task routing: Sending each task to the most suitable specialist keeps individual prompts focused, which tends to improve answer quality and user experience.
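In a Swarm-style design, the routing decision is usually made by the model through handoff functions. A cheap deterministic pre-check can still handle the obvious cases, such as requests that arrive with an attachment. The sketch below is a hypothetical rule-based router; the route names, the `Request` type, and the keyword list are illustrative assumptions, and anything the rules do not match falls through to the LLM triage step.

```python
from dataclasses import dataclass

# Illustrative keywords that suggest a real-time data request.
REALTIME_KEYWORDS = ("weather", "stock", "price", "forecast")


@dataclass
class Request:
    """Hypothetical incoming request from the web frontend."""
    text: str
    has_attachment: bool = False


def route(request: Request) -> str:
    """Return a route name for a request (hypothetical route names)."""
    if request.has_attachment:
        return "summarizer"  # uploaded documents go to summarization
    lowered = request.text.lower()
    if any(word in lowered for word in REALTIME_KEYWORDS):
        return "realtime_data"  # handled by an agent with function calling
    return "llm_triage"  # let the model-based triage agent decide


if __name__ == "__main__":
    print(route(Request("Please summarize this", has_attachment=True)))
    print(route(Request("What's the weather in Oslo?")))
    print(route(Request("I can't log in")))
```

Keyword rules are brittle: "What is the price of the premium plan?" would be sent to the real-time route even though it is a support question. That is why they work best as a fast path, with the model handling everything else.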

Step 7: Test, Monitor, and Scale Your Web Service

Now that you’ve set up the components, it’s important to test the web service to ensure smooth functionality. Monitor the service to check for bottlenecks or performance issues and make adjustments where necessary.

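- Scalability: Because Swarm keeps no state between calls, you can scale by running more instances of your web service behind a load balancer when demand increases. Adding agents extends what the service can do, not how much traffic it can handle.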

- Monitoring and feedback: Regularly check how the Router GPT routes tasks and whether Function Calling retrieves data correctly. Track latency and error rates per route, and use feedback loops to refine each agent’s instructions and improve response times.
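A web server handles many conversations at the same time, and per-route latency is one of the simplest numbers to monitor. The asyncio sketch below simulates three concurrent requests and records how long each route takes. `handle_request` is a hypothetical stub that sleeps instead of calling a model, and the module-level `LATENCIES` dictionary stands in for a real metrics system.

```python
import asyncio
import time
from collections import defaultdict

# Per-route latency samples in seconds (stand-in for a metrics backend).
LATENCIES: dict[str, list[float]] = defaultdict(list)


async def handle_request(route: str, delay: float) -> str:
    """Hypothetical handler; asyncio.sleep stands in for a model or API call."""
    start = time.perf_counter()
    await asyncio.sleep(delay)
    LATENCIES[route].append(time.perf_counter() - start)
    return f"{route} done"


async def main() -> list[str]:
    # The requests run concurrently, so total time is close to the longest delay.
    return await asyncio.gather(
        handle_request("support", 0.05),
        handle_request("realtime_data", 0.10),
        handle_request("support", 0.05),
    )


if __name__ == "__main__":
    t0 = time.perf_counter()
    print(asyncio.run(main()))
    print(f"wall time: {time.perf_counter() - t0:.2f}s")
    for route, values in LATENCIES.items():
        print(route, "avg latency:", sum(values) / len(values))
```

If a route’s average latency climbs while the others stay flat, that route’s prompt, functions, or upstream API is the first place to look.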

Final Thoughts

By combining OpenAI’s Swarm, GPTs, Function Calling, and Anthropic Artifacts, you can build a sophisticated LLM web service that is scalable, interactive, and dynamic, all while keeping the development process simple and efficient.

This modular approach ensures that your web service can grow as your needs evolve—whether it’s handling more users, adding new AI-driven features, or integrating external data sources.

Ready to build? Start leveraging these powerful tools today to bring your LLM web service to life with minimal effort.

Explore the Tools:

- OpenAI GPTs

- Function Calling

- Swarm

- Anthropic Artifacts