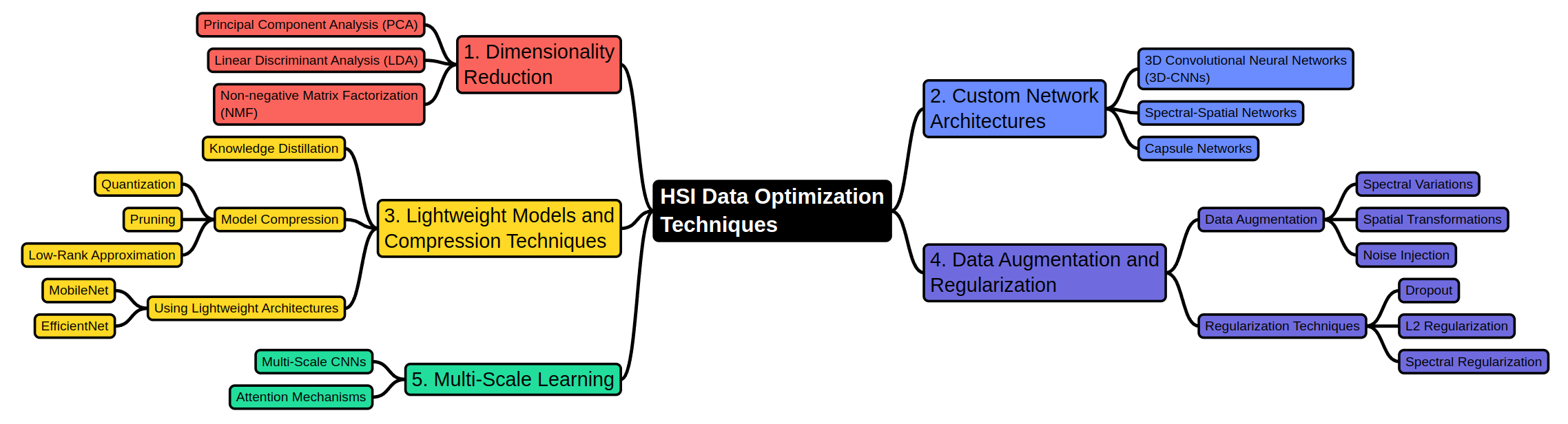

Optimizing a DNN Model for Processing Hyperspectral Imaging (HSI) Data

Introduction

Hyperspectral Imaging (HSI) is a powerful technique that captures a wide spectrum of light across dozens to hundreds of narrow wavelength bands. Unlike traditional RGB imaging, which only captures three broad bands of red, green, and blue, HSI provides rich spectral information for each pixel, enabling detailed analysis of the material properties, composition, and other characteristics of the observed objects.

However, this richness comes at a cost: HSI data is massive and complex, requiring sophisticated processing techniques to extract useful information. Deep Neural Networks (DNNs) are a natural choice for processing such data due to their ability to model complex patterns and relationships. But given the high dimensionality and large data volumes inherent to HSI, optimizing DNNs for this task is crucial to ensure efficiency and effectiveness. This article explores the key strategies for optimizing DNN models tailored for HSI data.

1. Dimensionality Reduction

HSI datasets often contain hundreds of spectral bands, leading to high-dimensional data that can be computationally expensive to process and prone to overfitting. Dimensionality reduction techniques help mitigate these issues by reducing the number of input features while preserving the essential information.

Principal Component Analysis (PCA): PCA is widely used to reduce the dimensionality of HSI data by transforming it into a set of orthogonal components that capture the most variance in the data. This reduces the input size for DNNs, making the models more manageable and less computationally intensive.
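As a rough illustration of the PCA step, the sketch below flattens a cube into per-pixel spectra and projects them onto the leading principal components using NumPy's SVD. The function name `pca_reduce`, the (height, width, bands) layout, and the random stand-in cube are assumptions made for this example, not part of any particular HSI pipeline.

```python
import numpy as np

def pca_reduce(cube, n_components):
    """Project an (H, W, B) cube onto its top principal components."""
    h, w, b = cube.shape
    pixels = cube.reshape(-1, b).astype(np.float64)  # one row per pixel spectrum
    centered = pixels - pixels.mean(axis=0)
    # SVD of the centered data; the rows of vt are the principal axes.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    reduced = centered @ components.T
    # Fraction of total variance retained by the kept components.
    explained = (s[:n_components] ** 2).sum() / (s ** 2).sum()
    return reduced.reshape(h, w, n_components), explained

rng = np.random.default_rng(0)
cube = rng.random((16, 16, 100))  # synthetic stand-in for a real HSI cube
reduced, explained = pca_reduce(cube, n_components=10)
print(reduced.shape)  # (16, 16, 10)
```

In practice the mean and components would normally be fitted on training pixels only and then reused to transform validation and test data.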

Linear Discriminant Analysis (LDA): LDA is particularly useful in supervised learning tasks, as it finds the linear combinations of features that best separate the labeled classes. Because it yields at most C - 1 components for C classes, the reduction can be aggressive, which helps focus the DNN on the most discriminative features.
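A minimal sketch of Fisher's LDA on labeled pixel spectra might look like the following. The helper `lda_projection` and the synthetic three-class data are hypothetical, and a pseudo-inverse is used here simply to keep the example robust when the within-class scatter matrix is poorly conditioned.

```python
import numpy as np

def lda_projection(x, y, n_components):
    """Return a (bands, n_components) matrix of Fisher discriminant directions."""
    n_bands = x.shape[1]
    overall_mean = x.mean(axis=0)
    within = np.zeros((n_bands, n_bands))   # within-class scatter
    between = np.zeros((n_bands, n_bands))  # between-class scatter
    for label in np.unique(y):
        xc = x[y == label]
        class_mean = xc.mean(axis=0)
        centered = xc - class_mean
        within += centered.T @ centered
        diff = (class_mean - overall_mean)[:, None]
        between += len(xc) * (diff @ diff.T)
    # Directions that maximize between-class scatter relative to within-class scatter.
    eigvals, eigvecs = np.linalg.eig(np.linalg.pinv(within) @ between)
    order = np.argsort(eigvals.real)[::-1][:n_components]
    return eigvecs[:, order].real

rng = np.random.default_rng(0)
n_classes, per_class, n_bands = 3, 50, 20
labels = np.repeat(np.arange(n_classes), per_class)
# Synthetic spectra: each class has its own mean spectrum plus unit noise.
class_means = rng.normal(0.0, 2.0, size=(n_classes, n_bands))
spectra = class_means[labels] + rng.normal(size=(labels.size, n_bands))

projection = lda_projection(spectra, labels, n_components=n_classes - 1)
reduced = spectra @ projection
print(reduced.shape)  # (150, 2)
```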

Non-negative Matrix Factorization (NMF): NMF decomposes the data into non-negative components, making it particularly suitable for HSI data, which often consists of non-negative values. This method not only reduces dimensionality but also facilitates interpretability, as the resulting components often correspond to physically meaningful spectra.
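To make the factorization concrete, here is a small sketch of NMF using the classic multiplicative update rules for the Frobenius-norm objective. The function name `nmf`, the iteration count, and the synthetic "abundance times spectra" data are assumptions for illustration; a production pipeline would more likely rely on an established library implementation.

```python
import numpy as np

def nmf(v, rank, n_iter=200, eps=1e-9, seed=0):
    """Factor a non-negative (pixels x bands) matrix v as w @ h with w, h >= 0."""
    rng = np.random.default_rng(seed)
    n_pixels, n_bands = v.shape
    w = rng.random((n_pixels, rank)) + eps  # per-pixel weights
    h = rng.random((rank, n_bands)) + eps   # component spectra
    for _ in range(n_iter):
        # Multiplicative updates keep both factors non-negative.
        h *= (w.T @ v) / (w.T @ w @ h + eps)
        w *= (v @ h.T) / (w @ h @ h.T + eps)
    return w, h

rng = np.random.default_rng(1)
# Synthetic pixels built from 3 non-negative spectra mixed with non-negative weights.
pixels = rng.random((200, 3)) @ rng.random((3, 50))
w, h = nmf(pixels, rank=3)
rel_err = np.linalg.norm(pixels - w @ h) / np.linalg.norm(pixels)
print(w.shape, h.shape)  # (200, 3) (3, 50)
```

Each row of `h` can then be inspected as a candidate component spectrum, which is where the interpretability benefit comes from.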

2. Custom Network Architectures

Given the unique characteristics of HSI data, custom DNN architectures can be designed to exploit both the spectral and spatial dimensions effectively.

3D Convolutional Neural Networks (3D-CNNs): Unlike the 2D CNNs typically used for RGB images, 3D-CNNs convolve across three dimensions: height, width, and spectral depth. This lets the model capture spatial patterns and spectral features jointly, which makes 3D-CNNs well suited to HSI data, although at a higher computational cost than 2D convolutions.
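The core operation of a 3D-CNN layer can be sketched with a deliberately naive NumPy loop. This is only meant to show how one kernel slides over the spectral and spatial axes at once; the (bands, height, width) layout, the kernel size, and the name `conv3d_valid` are assumptions, and a real model would use a deep learning framework's optimized 3D convolution.

```python
import numpy as np

def conv3d_valid(volume, kernel):
    """Naive 'valid' 3D cross-correlation of a (bands, H, W) volume with one kernel."""
    kb, kh, kw = kernel.shape
    b, h, w = volume.shape
    out = np.zeros((b - kb + 1, h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):          # slide along the spectral axis
        for j in range(out.shape[1]):      # slide along height
            for k in range(out.shape[2]):  # slide along width
                window = volume[i:i + kb, j:j + kh, k:k + kw]
                out[i, j, k] = np.sum(window * kernel)
    return out

rng = np.random.default_rng(0)
patch = rng.random((30, 9, 9))           # 30 bands, 9x9 spatial neighborhood
kernel = rng.standard_normal((7, 3, 3))  # spans 7 bands and a 3x3 window
features = conv3d_valid(patch, kernel)
print(features.shape)  # (24, 7, 7)
```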

Spectral-Spatial Networks: These networks separately handle spectral and spatial features before combining them. For example, 1D CNNs can be used to process spectral data, while 2D CNNs handle spatial data. This approach ensures that the model effectively captures relevant features from both domains.

Capsule Networks: Capsule networks represent features as vectors and route them between layers, which preserves hierarchical, part-whole relationships that pooling in ordinary CNNs tends to discard. This can be particularly useful given the complex and multidimensional nature of HSI data, since it may improve the model’s ability to retain spatial hierarchies and the relative arrangement of features.

3. Lightweight Models and Compression Techniques

Due to the large size and complexity of HSI data, DNN models can become computationally expensive to train and deploy. Techniques to reduce the model size and complexity are essential for making them practical, especially in resource-constrained environments.

Knowledge Distillation: This technique involves training a smaller “student” model to mimic the predictions of a larger “teacher” model. The student model, while being less complex, learns to approximate the teacher’s performance, making it more efficient for processing HSI data.
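One common way to express this training signal is a loss that blends a temperature-softened KL divergence between teacher and student with the usual cross-entropy on the true labels. The sketch below assumes that formulation; the function names, the temperature of 4, and the equal weighting are illustrative choices rather than values taken from this article.

```python
import numpy as np

def softmax(logits, temperature=1.0):
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def distillation_loss(student_logits, teacher_logits, labels,
                      temperature=4.0, alpha=0.5):
    """Blend soft-target KL divergence with hard-label cross-entropy."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student_soft = softmax(student_logits, temperature)
    kl = np.sum(p_teacher * (np.log(p_teacher + 1e-12)
                             - np.log(p_student_soft + 1e-12)), axis=-1)
    p_student = softmax(student_logits)
    ce = -np.log(p_student[np.arange(len(labels)), labels] + 1e-12)
    # The temperature**2 factor keeps the soft-target term on a comparable scale.
    return np.mean(alpha * temperature ** 2 * kl + (1.0 - alpha) * ce)

rng = np.random.default_rng(0)
teacher_logits = rng.normal(size=(8, 5)) * 3.0  # stand-in for a trained teacher
student_logits = rng.normal(size=(8, 5))        # stand-in for an untrained student
labels = rng.integers(0, 5, size=8)
loss = distillation_loss(student_logits, teacher_logits, labels)
```

When the student's logits match the teacher's exactly, the KL term vanishes and only the cross-entropy term remains.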

Model Compression: Compression techniques such as quantization, pruning, and low-rank approximation can significantly reduce the size of a DNN without drastically affecting its performance. For instance, pruning removes less important neurons or filters, reducing computational cost and memory usage.
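Two of these ideas can be sketched on a single convolutional weight tensor: structured pruning that drops the filters with the smallest L1 norms, followed by symmetric 8-bit quantization of what remains. The helper names, the (filters, channels, height, width) layout, and the 50% keep ratio are assumptions for the example; in a real workflow the pruned network would typically be fine-tuned afterwards.

```python
import numpy as np

def prune_filters(conv_weights, keep_ratio):
    """Keep the filters (axis 0) with the largest L1 norms."""
    n_filters = conv_weights.shape[0]
    n_keep = max(1, int(round(keep_ratio * n_filters)))
    norms = np.abs(conv_weights).reshape(n_filters, -1).sum(axis=1)
    keep = np.sort(np.argsort(norms)[-n_keep:])  # indices of surviving filters
    return conv_weights[keep], keep

def quantize_int8(weights):
    """Symmetric per-tensor quantization; returns int8 codes and the scale."""
    max_abs = np.abs(weights).max()
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return codes, scale

rng = np.random.default_rng(0)
weights = rng.standard_normal((32, 16, 3, 3))  # 32 filters over 16 input channels
pruned, kept = prune_filters(weights, keep_ratio=0.5)
codes, scale = quantize_int8(pruned)
dequantized = codes.astype(np.float64) * scale
print(pruned.shape, codes.dtype)  # (16, 16, 3, 3) int8
```

The int8 codes take a quarter of the storage of float32 weights, and the rounding error per weight is bounded by half the scale.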

Lightweight Architectures: Adapting lightweight models like MobileNet or EfficientNet for HSI data processing can significantly improve efficiency. These architectures are designed to be computationally efficient, but they assume three-channel RGB input, so at minimum the first convolutional layer has to be modified to accept the larger number of spectral bands.

4. Data Augmentation and Regularization

HSI datasets are often smaller than RGB datasets, which increases the risk of overfitting. Data augmentation and regularization are crucial for improving the generalization capability of DNN models.

Data Augmentation: Techniques such as spectral variation, spatial transformations, and noise injection can increase the diversity of the training data, helping the model to generalize better to unseen data. This is particularly important in HSI, where the data can be highly variable depending on the environmental conditions.
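The three kinds of augmentation mentioned above could be combined roughly as follows. This is a sketch under stated assumptions: patches are square with an (H, W, B) layout, spectral variation is modeled as a small random per-band gain, and the noise level and gain range are arbitrary example values.

```python
import numpy as np

def augment_patch(patch, rng, noise_std=0.01, gain_range=0.05):
    """Return a randomly augmented copy of a square (H, W, B) patch."""
    out = patch
    # Spatial transformations: random flips and a rotation by a multiple of 90 degrees.
    if rng.random() < 0.5:
        out = out[::-1, :, :]
    if rng.random() < 0.5:
        out = out[:, ::-1, :]
    out = np.rot90(out, k=int(rng.integers(0, 4)), axes=(0, 1))
    # Spectral variation: an independent gain for each band.
    gains = 1.0 + rng.uniform(-gain_range, gain_range, size=out.shape[-1])
    out = out * gains
    # Noise injection: additive Gaussian noise on every value.
    return out + rng.normal(0.0, noise_std, size=out.shape)

rng = np.random.default_rng(0)
patch = rng.random((9, 9, 50))
original = patch.copy()
augmented = augment_patch(patch, rng)
print(augmented.shape)  # (9, 9, 50)
```

Because the flips and rotation only rearrange pixels, each pixel keeps its own spectrum; only the gain and noise steps change spectral values.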

Regularization Techniques: Methods like dropout, L2 regularization, and spectral regularization are important for preventing overfitting. Regularization helps the model to remain robust and generalize well, even when trained on high-dimensional HSI data.
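Dropout and L2 regularization are simple enough to sketch directly. The example below uses the common "inverted dropout" formulation, which rescales the surviving activations during training so that nothing needs to change at inference time; the function names and the rate and weight-decay values are illustrative assumptions.

```python
import numpy as np

def dropout(x, rate, rng, training=True):
    """Inverted dropout: zero a fraction of activations and rescale the rest."""
    if not training or rate == 0.0:
        return x
    keep_prob = 1.0 - rate
    mask = rng.random(x.shape) < keep_prob
    return x * mask / keep_prob

def l2_penalty(weights, weight_decay):
    """Sum of squared weights over all layers, scaled by weight_decay."""
    return weight_decay * sum(np.sum(w ** 2) for w in weights)

rng = np.random.default_rng(0)
activations = np.ones((4, 8))
dropped = dropout(activations, rate=0.5, rng=rng)       # entries are 0.0 or 2.0
unchanged = dropout(activations, rate=0.5, rng=rng, training=False)

layer_weights = [np.ones((2, 2)), np.ones(3)]
penalty = l2_penalty(layer_weights, weight_decay=0.1)   # 0.1 * (4 + 3)
```

The penalty is added to the task loss during training, which discourages large weights and so limits how closely the model can fit noise in the high-dimensional input.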

5. Multi-Scale Learning

HSI data often contains features at multiple spatial or spectral scales, making multi-scale learning an effective approach.

Multi-Scale CNNs: These networks use filters of different sizes to capture features at various scales, allowing the model to understand both fine-grained details and broader patterns in the HSI data. This is crucial for accurately capturing the complex relationships inherent in HSI data.
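The idea of parallel filters with different receptive fields can be illustrated without a deep learning framework. In the sketch below, fixed mean filters of sizes 3, 5, and 7 stand in for learned convolution kernels, and their outputs are stacked as separate feature channels; the helper names and the choice of window sizes are assumptions for the example.

```python
import numpy as np

def box_filter_same(image, size):
    """Mean filter with an odd window size; reflect padding keeps the output shape."""
    pad = size // 2
    padded = np.pad(image, pad, mode="reflect")
    out = np.zeros(image.shape, dtype=np.float64)
    for di in range(size):
        for dj in range(size):
            out += padded[di:di + image.shape[0], dj:dj + image.shape[1]]
    return out / (size * size)

def multi_scale_features(band_image, sizes=(3, 5, 7)):
    """Stack responses from several window sizes along a new last axis."""
    return np.stack([box_filter_same(band_image, s) for s in sizes], axis=-1)

rng = np.random.default_rng(0)
band_image = rng.random((16, 16))  # a single band, or one PCA component
features = multi_scale_features(band_image)
print(features.shape)  # (16, 16, 3)
```

In a multi-scale CNN the same structure appears as parallel convolution branches whose outputs are concatenated before the next layer.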

Attention Mechanisms: Attention mechanisms enable the model to weight the most relevant spectral bands or spatial regions more heavily, which can improve accuracy. Attention layers add some computation of their own, but the learned weights can also be used to identify and drop less informative bands, which can reduce the overall computational overhead.
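A simple form of spectral attention is a squeeze-and-excitation-style gate that assigns each band a weight between 0 and 1. The sketch below assumes that design; the random matrices `w1` and `w2` stand in for parameters that would normally be learned, and the reduction factor of 4 is an arbitrary example value.

```python
import numpy as np

def band_attention(cube, w1, w2):
    """Reweight the bands of an (H, W, B) cube with a small gating network."""
    squeeze = cube.mean(axis=(0, 1))                # one summary value per band
    hidden = np.maximum(0.0, squeeze @ w1)          # bottleneck layer with ReLU
    weights = 1.0 / (1.0 + np.exp(-(hidden @ w2)))  # sigmoid gate per band
    return cube * weights, weights

rng = np.random.default_rng(0)
n_bands, reduction = 40, 4
cube = rng.random((8, 8, n_bands))
# Random stand-ins for learned parameters.
w1 = rng.normal(0.0, 0.1, size=(n_bands, n_bands // reduction))
w2 = rng.normal(0.0, 0.1, size=(n_bands // reduction, n_bands))

reweighted, band_weights = band_attention(cube, w1, w2)
top_bands = np.argsort(band_weights)[::-1][:5]  # bands with the highest weights
```

After training, a ranking such as `top_bands` is one way the attention weights could inform which bands to keep.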

Conclusion

Optimizing DNN models for processing HSI data is essential due to the high dimensionality and complexity of the data. Through dimensionality reduction, custom architectures, model compression, data augmentation, regularization, and multi-scale learning, it is possible to develop efficient and effective models for HSI applications. These optimizations not only make HSI processing more practical but also open up new possibilities for deploying such models in real-world scenarios, including mobile and edge computing environments. As technology continues to advance, the integration of HSI with optimized DNNs will likely play a crucial role in a wide range of fields, from agriculture and environmental monitoring to healthcare and beyond.